RankIQA: Learning from Rankings for No-reference Image Quality Assessment

The paper was published at ICCV 2017. An arXiv pre-print version and the supplementary material are available.

ICCV 2017 open access is available and the poster can be found here.

An updated version has been accepted at IEEE Transactions on Pattern Analysis and Machine Intelligence. An arXiv pre-print version is available.

Citation

Please cite our paper if you are inspired by the idea.

@InProceedings{Liu_2017_ICCV,
  author = {Liu, Xialei and van de Weijer, Joost and Bagdanov, Andrew D.},
  title = {RankIQA: Learning From Rankings for No-Reference Image Quality Assessment},
  booktitle = {The IEEE International Conference on Computer Vision (ICCV)},
  month = {Oct},
  year = {2017}
}

and

@ARTICLE{8642842,
  author={X. {Liu} and J. {Van De Weijer} and A. D. {Bagdanov}},
  journal={IEEE Transactions on Pattern Analysis and Machine Intelligence},
  title={Exploiting Unlabeled Data in CNNs by Self-supervised Learning to Rank},
  year={2019},
  pages={1-1},
  doi={10.1109/TPAMI.2019.2899857},
  ISSN={0162-8828},
}

Authors

Xialei Liu, Joost van de Weijer and Andrew D. Bagdanov

Institutions

Computer Vision Center, Barcelona, Spain

Media Integration and Communication Center, University of Florence, Florence, Italy

Abstract

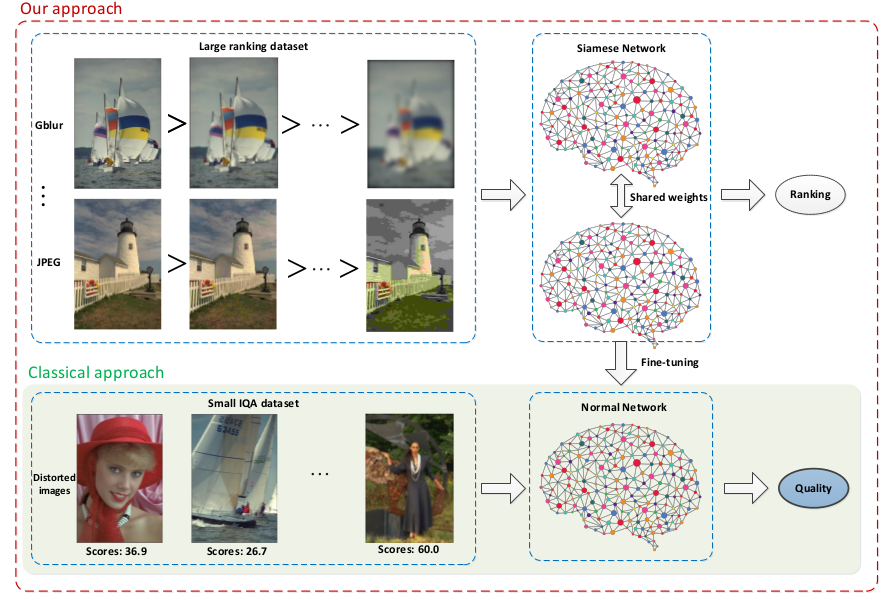

We propose a no-reference image quality assessment (NR-IQA) approach that learns from rankings (RankIQA). To address the problem of limited IQA dataset size, we train a Siamese Network to rank images in terms of image quality by using synthetically generated distortions for which relative image quality is known. These ranked image sets can be automatically generated without laborious human labeling. We then use fine-tuning to transfer the knowledge represented in the trained Siamese Network to a traditional CNN that estimates absolute image quality from single images. We demonstrate how our approach can be made significantly more efficient than traditional Siamese Networks by forward propagating a batch of images through a single network and backpropagating gradients derived from all pairs of images in the batch. Experiments on the TID2013 benchmark show that we improve the state-of-the-art by over 5%. Furthermore, on the LIVE benchmark we show that our approach is superior to existing NR-IQA techniques and that we even outperform the state-of-the-art in full-reference IQA (FR-IQA) methods without having to resort to high-quality reference images to infer IQA.

Models

The main idea of our approach is to address the problem of limited IQA dataset size, which allows us to train a much deeper CNN without overfitting.

Framework

All training and testing are done in the Caffe framework.

Pre-trained models

The pre-trained models are available to download.

Datasets

Ranking datasets

Using an arbitrary set of images, we synthetically generate distorted versions of these images over a range of distortion intensities. In this paper, the reference images of the Waterloo dataset and the validation set of Places2 are used as the undistorted source images. The details of the generated distortions can be found in the supplementary material. The source code can be found in this folder.
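The idea of a ranked set can be illustrated with a minimal Python sketch. It is not the repository's generation code: the single distortion type (additive Gaussian noise), the noise levels, the tiny image, and all function names are assumptions chosen for illustration. It shows that distorting one image at increasing intensities yields an ordering, and therefore ranked pairs, without any human labels.

```python
import random

def add_gaussian_noise(image, sigma, rng):
    """Return a copy of a grayscale image (rows of 0-255 values) with additive noise."""
    return [[min(255.0, max(0.0, px + rng.gauss(0.0, sigma))) for px in row]
            for row in image]

def make_ranked_set(image, sigmas, seed=0):
    """Distort one reference image at increasing intensities.

    The returned list is ordered from least to most distorted, so the
    list index doubles as a quality rank (lower index = higher quality).
    """
    rng = random.Random(seed)
    return [add_gaussian_noise(image, s, rng) for s in sorted(sigmas)]

def ranked_pairs(ranked_set):
    """Enumerate all (better, worse) index pairs implied by the ordering."""
    n = len(ranked_set)
    return [(i, j) for i in range(n) for j in range(i + 1, n)]

# Hypothetical 4x4 reference image and noise levels, for illustration only.
reference = [[128.0] * 4 for _ in range(4)]
levels = make_ranked_set(reference, sigmas=[0.0, 5.0, 15.0, 40.0])
pairs = ranked_pairs(levels)
```

With four distortion levels, a single reference image already provides six ranked pairs for training.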

IQA datasets

We report experimental results on several IQA datasets: TID2013, LIVE, CSIQ, and MLIVE.

Training

The details can be found in src.

RankIQA

Using the set of ranked images, we train a Siamese network. Training can be made significantly more efficient than with traditional Siamese Networks by forward propagating a batch of images through a single network and backpropagating gradients derived from all pairs of images in the batch. The result is a Siamese network that ranks images by image quality.
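The batch trick can be sketched in plain Python, separately from the Caffe implementation in src. The sketch assumes a standard pairwise hinge loss with a margin and the convention that a higher score means higher quality; the function name, the margin value, and the sign convention are assumptions. Each image is scored once, and the loss and its gradient are then accumulated over every ranked pair in the batch.

```python
def batch_ranking_loss(scores, ranks, margin=1.0):
    """Pairwise hinge loss over all pairs in a batch, with gradients.

    scores: network outputs, one per image (assumed: higher = better quality).
    ranks:  distortion level per image (lower = less distorted = better).
    Returns (mean loss, gradient of that loss w.r.t. each score).
    """
    n = len(scores)
    grads = [0.0] * n
    total, num_pairs = 0.0, 0
    for i in range(n):
        for j in range(n):
            if ranks[i] < ranks[j]:          # image i should outscore image j
                num_pairs += 1
                violation = margin - (scores[i] - scores[j])
                if violation > 0:            # pair is mis-ranked or inside the margin
                    total += violation
                    grads[i] -= 1.0
                    grads[j] += 1.0
    if num_pairs == 0:
        return 0.0, grads
    return total / num_pairs, [g / num_pairs for g in grads]

# Hypothetical scores for three images of increasing distortion.
loss, grads = batch_ranking_loss([2.0, 1.5, 0.0], ranks=[0, 1, 2])
```

A batch of n images costs n forward passes but contributes up to n(n-1)/2 pairs to the gradient, whereas a two-branch setup needs a separate forward pass of both branches for every pair.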

RankIQA+FT

Finally, we extract a single branch of the Siamese network (we are interested at this point in the representation learned in the network, and not in the ranking itself), and fine-tune it on available IQA data. This effectively calibrates the network to output IQA measurements.
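As a toy illustration of the calibration step, the sketch below fine-tunes a linear scoring function with a squared-error loss against human quality scores. It is a simplification, not the actual training setup: the linear model stands in for the CNN branch, and the feature vectors, labels, initial weights, and learning rate are hypothetical.

```python
def predict(x, w, b):
    """Score one feature vector with a linear head."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def mse(features, targets, w, b):
    """Mean squared error between predicted and target quality scores."""
    return sum((predict(x, w, b) - y) ** 2
               for x, y in zip(features, targets)) / len(targets)

def fine_tune(features, targets, w, b, lr=0.05, epochs=200):
    """Gradient descent on squared error, starting from given weights.

    w, b play the role of weights carried over from the ranking stage.
    """
    w = list(w)
    n = len(features)
    for _ in range(epochs):
        grad_w = [0.0] * len(w)
        grad_b = 0.0
        for x, y in zip(features, targets):
            err = predict(x, w, b) - y
            for k, xk in enumerate(x):
                grad_w[k] += 2.0 * err * xk / n
            grad_b += 2.0 * err / n
        w = [wi - lr * g for wi, g in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b

# Hypothetical features and human quality scores, for illustration only.
features = [[0.0, 1.0], [1.0, 0.5], [2.0, 0.0]]
targets = [1.0, 3.0, 5.0]
w0, b0 = [1.0, 0.0], 0.0        # stand-in for ranking-stage weights
w1, b1 = fine_tune(features, targets, w0, b0)
```

The initial weights already order the three examples correctly but on the wrong scale; fine-tuning moves the outputs toward the labeled quality values.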